The major communication modalities used by social robots have been speech, gesture, and facial expression, none of which involve direct physical contact between the robot and the human. Furthermore, in most interactions there is a clearly defined piece of information that the robot wants to convey to the human. In social human-human interactions, however, more subtle information such as emotion and personality is often exchanged, sometimes through direct physical contact. This talk highlights two ongoing projects in socially aware physical human-robot interaction. The first project investigates how people perceive a mobile robot exhibiting different collision avoidance behaviors. Through nearly 500 human-robot crossing experiments in a controlled lab setting, we obtain statistically significant subjective and objective data on the perceived safety and comfort of those behaviors, which serve as guidelines for designing motion planning algorithms for robots navigating in crowded environments. In the second project, we explore direct physical contact as a new modality for conveying emotion and personality. Although we often observe (to our alarm) people spontaneously touching robots that are not designed for direct contact with humans, this modality has rarely been used in social robots. I will describe the hardware platform and controller developed for human-robot hugging, and discuss its potential applications in the social robotics context.

Dr. Katsu Yamane is a Senior Scientist at Honda Research Institute USA. He received his B.S., M.S., and Ph.D. degrees in Mechanical Engineering in 1997, 1999, and 2002, respectively, from the University of Tokyo, Japan. Prior to joining Honda in 2018, he was a Senior Research Scientist at Disney Research, an Associate Professor at the University of Tokyo, and a postdoctoral fellow at Carnegie Mellon University. Dr. Yamane is a recipient of the King-Sun Fu Best Transactions Paper Award and the Early Academic Career Award from the IEEE Robotics and Automation Society, and the Young Scientist Award from the Ministry of Education, Japan. His research interests include physical human-robot interaction, humanoid robot control and motion synthesis, character animation, and human motion simulation.

Social interactions between robots and humans will become fundamental as service robots are deployed in domestic settings, healthcare centers, and even Industry 4.0. Social robots' assistance must go far beyond the services that software alone could deliver, for example through a mobile device. Social robots have the potential to merge social interaction with physical responses, embodying an action. This keynote will present examples, benefits, and challenges of social robots, as well as the added value they should deliver.

In this keynote speech, I would like to introduce our research activities.

Francesco Ferro is the CEO and co-founder of PAL Robotics, one of the top service robotics companies in the world, and a euRobotics aisbl Board Director. He received a BSc+MSc degree in Telecommunications Engineering from the Politecnico di Torino (Italy) in 2002, a Master's degree from ISEN (Lille, France), and an Executive MBA from the University of Barcelona (Spain) in 2011. Since 2004, he has been developing cutting-edge humanoid service robots at PAL Robotics. The Barcelona-based company's mission is to make people's lives easier through robotics, and for more than 15 years it has been developing high-tech service robots for assistive and industrial environments.

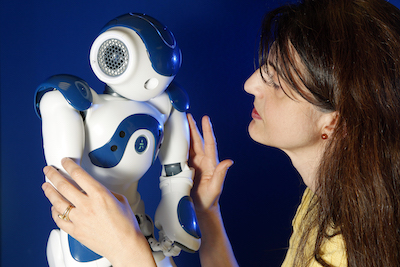

As robots progressively enter our homes, workplaces, social spaces, and everyday activities, the social robotics community is increasing its efforts to ascertain which social skills robots might need in different contexts in order to be useful to and trusted by humans. Affective skills are an important element of successful social interactions, and friendliness is often posited as a desirable skill for social robots. However, designing and building friendly robots is not straightforward, and numerous questions arise: What is a friendly robot, and do we always want one? When should a robot be friendly? How can robots be friendly in ways that different people like? In this talk, I will address these and related questions from the perspectives of embodied artificial intelligence, developmental robotics, and embodied affect. In particular, I will develop and illustrate how these approaches, and their emphasis on notions such as embodiment, autonomy, adaptation, learning, and interaction, can help us design autonomous affective robots that can be, or become, friendly to us as a function of our interactions with them.

Lola Cañamero is Reader in Adaptive Systems and Head of the Embodied Emotion, Cognition and (Inter-)Action Lab in the School of Computer Science at the University of Hertfordshire in the UK, which she joined as faculty in 2001. She holds an undergraduate degree (Licenciatura) in Philosophy from the Complutense University of Madrid and a PhD in Computer Science (Artificial Intelligence) from the University of Paris-XI, France. She turned to Embodied AI and robotics as a postdoctoral fellow in the groups of Rodney Brooks at MIT (USA) and Luc Steels at the VUB (Belgium). Since 1995, her research has investigated the interactions between motivation, emotion, and embodied cognition and action from the perspectives of adaptation, development, and evolution, using autonomous and social robots and artificial life simulations. Some of this research has been carried out as part of interdisciplinary projects in which she has held Principal Investigator and coordinating roles, such as the EU-funded HUMAINE (on emotion-oriented information technology), FEELIX-GROWING (investigating emotion development in humans, non-human primates, and robots), and ALIZ-E (development of social companions for children with diabetes) projects, and, currently, the UH-funded Autonomous Robots as Embodied Models of Mental Disorders project. She has played a pioneering role in nurturing the emotion modeling community. She is the author or co-author of over 150 peer-reviewed publications on these topics. Website: www.emotion-modeling.info.

© University of Hertfordshire